Overview:-

Client-server is a computing architecture that separates clients, which request services, from servers, which provide them. Each client or server connected to a network can also be referred to as a node. The most basic type of client-server architecture employs only two types of nodes: clients and servers. This type of architecture is sometimes referred to as two-tier.

Each instance of the client software can send data requests to one or more connected servers. In turn, the servers accept these requests, process them, and return the requested information to the client. Although this concept can be applied to many different kinds of applications, the architecture remains fundamentally the same.

These days, clients are most often web browsers, while typical servers include web servers, database servers and mail servers. Most online games also use a client-server design.

Characteristics of a client:-

• The sender of a request is known as the client

• Initiates requests

• Waits for and receives replies

• Usually connects to a small number of servers at one time

• Typically interacts directly with end-users using a graphical user interface

Characteristics of a server:-

• Receives the requests sent by clients

• Upon receipt of requests, processes them and then serves replies

• Usually accepts connections from a large number of clients

• Typically does not interact directly with end-users
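A minimal sketch of the request/reply cycle described above, using Python's standard socket and socketserver modules. It assumes a made-up line-based protocol in which the client sends one line of text and the server replies with the same line in upper case, a hypothetical stand-in for real processing. `UpperCaseHandler`, `start_server` and `send_request` are illustrative names, and both ends run on the local machine rather than on separate nodes.

```python
import socket
import socketserver
import threading


class UpperCaseHandler(socketserver.StreamRequestHandler):
    """Server side: wait for a request, process it, and send back a reply."""

    def handle(self):
        request = self.rfile.readline().decode("utf-8").strip()
        reply = request.upper()  # hypothetical stand-in for real processing
        self.wfile.write((reply + "\n").encode("utf-8"))


def start_server():
    """Start a server on a free local port; it can serve many clients at once."""
    server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), UpperCaseHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def send_request(address, text):
    """Client side: initiate a request, then wait for and return the reply."""
    with socket.create_connection(address, timeout=5) as conn:
        conn.sendall((text + "\n").encode("utf-8"))
        with conn.makefile("r", encoding="utf-8") as replies:
            return replies.readline().strip()


if __name__ == "__main__":
    server = start_server()
    try:
        print(send_request(server.server_address, "hello"))  # prints HELLO
    finally:
        server.shutdown()
        server.server_close()
```

The server uses a threading server class so it can accept connections from many clients, while each client connects, sends its request and blocks until the reply arrives.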

The following are examples of client/server architectures:-

1) Two tier architectures

In a two-tier client/server architecture, the user interface runs in the user's desktop environment, and the database management system (DBMS) services usually run on a server, a more powerful machine that serves many clients. Information processing is split between the user interface environment and the database management server environment. The database management server provides stored procedures and triggers. Software vendors provide tools to simplify the development of applications for the two-tier client/server architecture.

2) Multi-tiered architecture

Some designs are more sophisticated and consist of three different kinds of nodes: clients; application servers, which process data for the clients; and database servers, which store data for the application servers. This configuration is called a three-tier architecture and is a widely used type of client-server architecture. Designs that contain more than two tiers are referred to as multi-tiered or n-tiered.

The advantage of n-tiered architectures is that they are far more scalable, since they balance and distribute the processing load among multiple, often redundant, specialized server nodes. This in turn improves overall system performance and reliability, since more of the processing load can be accommodated simultaneously.

The disadvantages of n-tiered architectures include more load on the network itself, due to a greater amount of network traffic, and greater difficulty in programming and testing than with two-tier architectures, because more devices have to communicate to complete a client's request.
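To make the division of responsibilities concrete, here is a sketch of the three tiers in Python, with each tier reduced to a function or class in a single process. The in-memory SQLite database stands in for a database server, and the customer table, `AccountService` and `client_view` are hypothetical. In a real n-tier deployment each call between tiers would cross the network, which is where the extra traffic and testing effort described above come from.

```python
import sqlite3


def make_database_tier():
    """Data tier: an in-memory SQLite database standing in for a database server."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, balance REAL)")
    db.executemany(
        "INSERT INTO customer VALUES (?, ?, ?)",
        [(1, "Acme", 1200.0), (2, "Globex", -50.0)],
    )
    return db


class AccountService:
    """Application tier: business rules live here, not in the client."""

    def __init__(self, db):
        self._db = db

    def status(self, customer_id):
        row = self._db.execute(
            "SELECT name, balance FROM customer WHERE id = ?", (customer_id,)
        ).fetchone()
        if row is None:
            return "unknown customer"
        name, balance = row
        return f"{name}: {'overdrawn' if balance < 0 else 'in good standing'}"


def client_view(service, customer_id):
    """Presentation tier: the client only asks and displays."""
    return "[screen] " + service.status(customer_id)


if __name__ == "__main__":
    service = AccountService(make_database_tier())
    print(client_view(service, 2))  # prints [screen] Globex: overdrawn
```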

Advantages of client-server architecture:-

In most cases, client-server architecture enables the roles and responsibilities of a computing system to be distributed among several independent computers that are known to each other only through a network. An additional advantage of this architecture is greater ease of maintenance. For example, it is possible to replace, repair, upgrade, or even relocate a server while its clients remain both unaware of and unaffected by that change. This independence from change is also referred to as encapsulation.

All the data is stored on the servers, which generally have far greater security controls than most clients. Servers can better control access and resources to ensure that only clients with the appropriate permissions can access and change data.

Since data storage is centralized, updates to those data are far easier to administer.

It works with many different clients that have different capabilities.

Disadvantages of client-server architecture:-

Traffic congestion on the network has been an issue since the inception of the client-server paradigm. As the number of simultaneous client requests to a given server increases, the server can become severely overloaded.

The client-server paradigm lacks robustness: if a critical server fails, clients' requests cannot be fulfilled.

Friday, December 28, 2007

Wednesday, December 26, 2007

Translating from ASCII to EBCDIC:

Almost all network communications use the ASCII character set, but the AS/400 natively uses the EBCDIC character set. Clearly, once we're sending and receiving data over the network, we'll need to be able to translate between the two.

There are many different ways to translate between ASCII and EBCDIC. The API that we'll use to do this is called QDCXLATE, and you can find it in IBM's information center at the following link: http://publib.boulder.ibm.com/pubs/html/as400/v4r5/ic2924/info/apis/QDCXLATE.htm

There are other APIs that can be used to do these conversions. In particular, the iconv() set of APIs does a really good job; however, QDCXLATE is the easiest to use and will work just fine for our purposes.

The QDCXLATE API takes the following parameters:

Parm#  Description                Usage  Data Type
1      Length of data to convert  Input  Packed (5,0)
2      Data to convert            I/O    Char (*)
3      Conversion table           Input  Char (10)

And, since QDCXLATE is an OPM API, we actually call it as a program. Traditionally, you'd call an OPM API with the RPG 'CALL' statement, like this:

     C                   CALL      'QDCXLATE'
     C                   PARM      128           LENGTH            5 0
     C                   PARM                    DATA            128
     C                   PARM      'QTCPEBC'     TABLE            10

However, I find it easier to code program calls using prototypes, just as I do for procedure calls. So, when I call QDCXLATE, I will use the following syntax:

     D Translate       PR                  ExtPgm('QDCXLATE')
     D   Length                       5P 0 const
     D   Data                     32766A   options(*varsize)
     D   Table                       10A   const

     C                   callp     Translate(128: Data: 'QTCPEBC')

There are certain advantages to using the prototyped call. The first, and most obvious, is that each time we want to call the program, we can do it in one line of code. The next is that the const keyword allows the compiler to automatically convert expressions or numeric variables to the data type required by the call. Finally, the prototype allows the compiler to check the parameters more thoroughly when the program is called.

There are two tables that we will use in our examples: QTCPASC and QTCPEBC. These tables are easy to remember if you keep in mind that the table name specifies the character set that we want to translate the data into. In other words, QTCPEBC is the IBM-supplied table for translating TCP/IP data to EBCDIC (from ASCII), and QTCPASC is the IBM-supplied table for translating it to ASCII (from EBCDIC).
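QDCXLATE works by looking each byte up in a translation table. The Python sketch below mimics that idea; it is not a way to call QDCXLATE. It assumes that Python's `cp037` codec (EBCDIC CCSID 37, US/Canada) is a reasonable stand-in for QTCPEBC and QTCPASC, which may differ from it for some byte values, and it treats the "ASCII" side as 8-bit Latin-1. `TO_EBCDIC`, `TO_ASCII` and `translate` are hypothetical names.

```python
# Hypothetical stand-ins for QTCPEBC and QTCPASC: 256-byte lookup tables built
# from Python's cp037 codec. IBM's tables may differ for some byte values.
TO_EBCDIC = bytes(range(256)).decode("latin-1").encode("cp037")
TO_ASCII = bytes(range(256)).decode("cp037").encode("latin-1")


def translate(length, data, table):
    """Translate the first `length` bytes of the bytearray `data` in place,
    mirroring QDCXLATE's length / data (I/O) / table parameters."""
    data[:length] = data[:length].translate(table)


if __name__ == "__main__":
    buffer = bytearray(b"Hello")
    translate(len(buffer), buffer, TO_EBCDIC)
    print(buffer.hex())  # prints c885939396
    translate(len(buffer), buffer, TO_ASCII)
    print(buffer.decode("ascii"))  # prints Hello
```

As with QDCXLATE, the buffer is translated in place and only the first `length` bytes are touched, so a partially filled receive buffer can be converted without disturbing the rest.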

Tuesday, December 25, 2007

Basics of UML:

In the field of software engineering, the Unified Modeling Language (UML) is a standardized specification language for object modeling. UML is a general-purpose modeling language that includes a graphical notation used to create an abstract model of a system, referred to as a UML model.

UML diagrams represent three different views of a system model:

Functional requirements view

Emphasizes the functional requirements of the system from the user's point of view.

Includes use case diagrams.

Static structural view

Emphasizes the static structure of the system using objects, attributes, operations, and relationships.

Includes class diagrams and composite structure diagrams.

Dynamic behavior view

Emphasizes the dynamic behavior of the system by showing collaborations among objects and changes to the internal states of objects.

Includes sequence diagrams, activity diagrams and state machine diagrams.

UML 2.0 defines 13 different types of diagrams, grouped as follows.

Structure diagrams emphasize what things must be in the system being modeled:

• Class diagram

• Component diagram

• Composite structure diagram

• Deployment diagram

• Object diagram

• Package diagram

Behavior diagrams emphasize what must happen in the system being modeled:

• Activity diagram

• State machine diagram

• Use case diagram

Interaction diagrams, a subset of behavior diagrams, emphasize the flow of control and data among the things in the system being modeled:

• Communication diagram

• Interaction overview diagram

• Sequence diagram

• Timing diagram

UML is not restricted to modeling software. UML is also used for business process modeling, systems engineering modeling and representing organizational structures.

Delaying a job by less than a second:

How do we check if new records have been added to a physical file?

There are ways to wait for the latest record to be added. For instance, end-of-file delay (OVRDBF with EOFDLY) makes the job wait and then try the read again whenever no record is found, which is equivalent to coding the delay yourself. It is also possible to associate a data queue with the file and send an entry to the data queue every time a record is added to the file. This in turn "wakes up" the batch job and makes it read the file.

The easiest way to poll a file would be to reposition and read again every time end of file is reached. But this is not a good solution: jobs that continually poll a file this way use far too much CPU and slow the system down, so a delay must be introduced. The simplest way is to run the Delay Job (DLYJOB) command every time an end-of-file condition is met. But DLYJOB is not perfect: it can delay a job only by a whole number of seconds, so the minimum delay is one second.

One second is fine in most cases, but sometimes you can't afford to wait a whole second, and you can't afford not to wait either. This is where a C function comes in handy: pthread_delay_np delays a thread for a time specified in seconds plus nanoseconds, although the actual delay may be somewhat longer than requested.

Because this is a C API, it expects its parameter in a C format: a timespec structure holding seconds and nanoseconds, which can be declared in RPG as a data structure:

     D timeSpec        ds
     D   seconds                     10i 0
     D   nanoseconds                 10i 0

I declared the API as follows:

      * pthread_delay_np returns a C int (4 bytes), declared here as 10i 0
     D delay           pr            10i 0 extProc('pthread_delay_np')
     D                                 *   value

The API expects a pointer to the timespec structure, passed by value. It returns zero on success, or a non-zero error number if a problem occurred (which is unlikely if you pass a valid timespec).

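Specimen code. It asks for a delay of 0 seconds plus 10,000,000 nanoseconds, that is, 10 milliseconds, and falls back to DLYJOB 1 if the API reports an error. The fallback uses the C runtime system() function, which is found in binding directory QC2LE:

     D rc              s             10i 0
     D system          pr            10i 0 extProc('system')
     D   command                       *   value options(*string)

     C                   eval      seconds = 0
     C                   eval      nanoseconds = 10000000
     C                   eval      rc = delay(%addr(timeSpec))
     C                   if        rc <> 0
     C                   callp(e)  system('DLYJOB 1')
     C                   endif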
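The same polling pattern can be sketched outside RPG. This Python version swaps in `time.sleep`, which accepts fractional seconds, for pthread_delay_np; the 0.01-second default corresponds to 10,000,000 nanoseconds. `read_next` is a hypothetical callable that returns a record, or None at end of file, and the timeout is an added assumption so the loop cannot wait forever.

```python
import time


def poll_for_record(read_next, delay_seconds=0.01, timeout_seconds=1.0):
    """Call read_next() until it returns a record or the timeout expires.

    Between attempts the loop sleeps for delay_seconds
    (0.01 s = 10,000,000 ns) instead of spinning on the CPU.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        record = read_next()
        if record is not None:
            return record
        if time.monotonic() >= deadline:
            return None  # no record arrived in time
        time.sleep(delay_seconds)  # sub-second wait, where DLYJOB would wait 1 s


if __name__ == "__main__":
    arrivals = iter([None, None, "record 1"])  # hypothetical: EOF twice, then a record
    print(poll_for_record(lambda: next(arrivals)))  # prints record 1
```

The sleep between attempts is what keeps the job from burning CPU while it waits; making it shorter than one second trades a little CPU for lower latency, which is exactly the gap DLYJOB cannot fill.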

Sunday, December 23, 2007

Useful Functions in DDS:

Data description specifications (DDS) provide a powerful and convenient way to describe data attributes in file descriptions external to the application program that processes the data. People keep uncovering ways, however, to make DDS do more than you might have thought possible.

1. Resizing a field:

Have you ever wanted to rename a field or change its size for programming purposes? For example, suppose you want to read a file with packed fields sized 17,2 into a program that uses fields sized 8,0. You can do this easily by building a logical file over the physical file that redefines the fields with a different size. The values are automatically truncated and resized into the 8,0 fields, unless a value is too large to fit, in which case an error results.

Physical File: TEST1

     A          R GLRXX
     A*
     A            TSTN01        17P 2       TEXT('PERIOD 1 AMOUNT')
     A            TSTN02        17P 2       TEXT('PERIOD 2 AMOUNT')
     A            TSTN03        17P 2       TEXT('PERIOD 3 AMOUNT')
     A            TSTN04        17P 2       TEXT('PERIOD 4 AMOUNT')

Logical File: TEST2

     A          R GLRXX                     PFILE(TEST1)
     A*
     A            TSTN01         8P 0
     A            TSTN02         8P 0
     A            TSTN03         8P 0
     A            TSTN04         8P 0

2. RENAME function:

If you want to rename a field for RPG or CL programming purposes, define the field in DDS with the new name and use the RENAME function to name the old field. The field can be resized at the same time. This example uses the same physical file, TEST1:

Logical File: TEST3

     A          R GLRBX                     PFILE(TEST1)
     A*
     A            TSTN05         8P 0       RENAME(TSTN01)
     A            TSTN06         8P 0       RENAME(TSTN02)
     A            TSTN07         8P 0       RENAME(TSTN03)
     A            TSTN08         8P 0       RENAME(TSTN04)

3. Creating a key field for a joined logical using the SST function:

Another neat trick when building joined logical files is to join on a partial key, that is, a substring of a field. For example, say the secondary file CTRXRFP has a field CSTCTR that you want to join to your primary file, but the primary file has no matching key field: the key value is embedded within the primary file's field CTRACC. Use the SST function on CTRACC to extract the part needed for the join into a new field, XXCC, and then use XXCC in the join to CSTCTR in the secondary file. The I in the usage column marks the substring fields as input-only.

     A          R GLRXX                     JFILE(GLPCOM CTRXRFP)
     A          J                           JOIN(1 2)
     A                                      JFLD(XXCC CSTCTR)
     A            WDCO                      RENAME(BXCO)
     A            WDCOCT             I      SST(CTRACC 1 10)
     A            WDEXP              I      SST(CTREXP 12 11)
     A            WDACCT                    RENAME(CTRACC)
     A            XXCC               I      SST(CTRACC 5 6)
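The join above can be modelled in a few lines of Python to show how SST's 1-based start position and length pick out the key. This is only a sketch: the field values are invented, records are plain dictionaries, and it assumes the logical file has no JDFTVAL keyword, so primary records without a match are left out. `sst` and `join_on_substring` are hypothetical helpers.

```python
def sst(value, start, length):
    """Mimic DDS SST(field start length): 1-based start position and a length."""
    if start < 1 or length < 1 or start + length - 1 > len(value):
        raise ValueError("substring falls outside the field")
    return value[start - 1:start - 1 + length]


def join_on_substring(primary, secondary):
    """Inner-join primary rows to secondary rows where SST(CTRACC 5 6) = CSTCTR."""
    by_key = {row["CSTCTR"]: row for row in secondary}
    joined = []
    for row in primary:
        xxcc = sst(row["CTRACC"], 5, 6)  # the derived, input-only XXCC field
        match = by_key.get(xxcc)
        if match is not None:  # unmatched primary rows are dropped
            joined.append({**row, "XXCC": xxcc, **match})
    return joined


if __name__ == "__main__":
    primary = [{"CTRACC": "XXXX123456"}, {"CTRACC": "XXXX999999"}]
    secondary = [{"CSTCTR": "123456", "DESC": "Cost center A"}]
    print(join_on_substring(primary, secondary))
```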

4. Concatenating fields using the CONCAT function:

Another trick for building a field that doesn't exist in the underlying physical file is to use the CONCAT function. For example, you want to create a field FSTLST (first and last name) from the two fields FIRST and LAST. This can be done as follows:

     A            FIRST     R
     A            LAST      R
     A            FSTLST                    CONCAT(FIRST LAST)

5. Using the RANGE function:

In your logical file you may want to select a range of records rather than listing individual values. For example, you want only the records in your logical file where the selected field is between 100 and 900, inclusive. This can be done as follows:

     A          S XXPG#                     RANGE('100' '900')

You can also code several RANGE select lines to select more than one range.

6. Using the VALUES function:

In your logical file you may want to select specific records that have certain values by using the VALUES function. Example: You want only the records in your logical file where the selected field has the values 'O', 'P', and 'E'. This can be done as follows:

     A          S RPTCTR                    VALUES('O ' 'P ' 'E ')
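The selection rules can be sketched in Python as follows. It assumes that XXPG# and RPTCTR are character fields compared character by character, and that only select (S) statements are coded, each on its own line, so a record is kept if any of them is satisfied. `range_select`, `values_select` and `select_records` are hypothetical names.

```python
def range_select(value, low, high):
    """DDS RANGE(low high): select when low <= value <= high (both ends inclusive)."""
    return low <= value <= high


def values_select(value, allowed):
    """DDS VALUES(...): select when the field equals one of the listed values."""
    return value in allowed


def select_records(records, *tests):
    """Keep a record if any select test passes (separate S lines are ORed)."""
    return [r for r in records if any(test(r) for test in tests)]


if __name__ == "__main__":
    pages = ["050", "100", "500", "900", "901"]
    print(select_records(pages, lambda p: range_select(p, "100", "900")))
    # prints ['100', '500', '900']
```

Because the comparison is by character rather than by number, a left-justified value such as '2  ' also falls inside RANGE('100' '900'): `range_select('2  ', '100', '900')` returns True. Zero-padding the field avoids the surprise.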

Thursday, December 20, 2007

Business Process Management:

Business Process Management (BPM) is an emerging field of knowledge and research at the intersection between management and information technology, encompassing methods, techniques and tools to design, enact, control, and analyze operational business processes involving humans, organizations, applications, documents and other sources of information.

BPM covers activities performed by organizations to manage and, if necessary, improve their business processes. BPM systems monitor the execution of business processes so that managers can analyze and change processes in response to data rather than just a hunch. In short, Business Process Management is a management model that allows organizations to manage their processes like any other asset and to improve them over time.

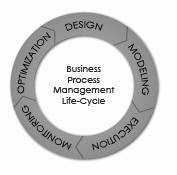

The activities which constitute business process management can be grouped into five categories: Design, Modeling, Execution, Monitoring, and Optimization.

Process Design encompasses the following:

1. (Optionally) capturing existing processes and documenting their design in terms of process map/flow, actors, alerts and notifications, escalations, standard operating procedures, service level agreements and task hand-over mechanisms.

2. Designing the "to-be" process, covering all of the above, and ensuring that a correct and efficient design is prepared in theory.

Process Modeling encompasses taking the process design and introducing different cost, resource, and other constraint scenarios to determine how the process will operate under different circumstances.

Process Execution has traditionally been automated by developing or purchasing an application that executes the required steps of the process.

Monitoring encompasses the tracking of individual processes so that information on their state can be easily seen and the provision of statistics on the performance of one or more processes.

Process Optimization includes retrieving process performance information from the modeling or monitoring phase, identifying potential or actual bottlenecks and opportunities for cost savings or other improvements, and then applying those enhancements to the design of the process, thus continuing the value cycle of business process management.

In a medium-to-large organization, a good business process management system allows the business to accommodate day-to-day changes in its processes due to competitive, regulatory or market challenges without relying heavily on the IT department.

Wednesday, December 19, 2007

Testing Techniques:

1) Black-box testing: Testing that verifies that the item being tested, when given the appropriate input, provides the expected results.

2) Boundary-value testing: Testing of unusual or extreme situations that an item should be able to handle.

3) Class testing: The act of ensuring that a class and its instances (objects) perform as defined.

4) Class-integration testing: The act of ensuring that the classes, and their instances, that form a piece of software perform as defined.

5) Code review: A form of technical review in which the deliverable being reviewed is source code.

6) Component testing: The act of validating that a component works as defined.

7) Coverage testing: The act of ensuring that every line of code is exercised at least once.

8) Design review: A technical review in which a design model is inspected.

9) Inheritance-regression testing: The act of running the test cases of the superclasses, both direct and indirect, on a given subclass.

10) Integration testing: Testing to verify that several portions of software work together.

11) Method testing: Testing to verify that a method (member function) performs as defined.

12) Model review: An inspection, ranging anywhere from a formal technical review to an informal walkthrough, by others who were not directly involved with the development of the model.

13) Path testing: The act of ensuring that all logic paths within your code are exercised at least once.

14) Prototype review: A process by which your users work through a collection of use cases, using a prototype as if it were the real system. The main goal is to test whether the design of the prototype meets their needs.

15) Prove it with code: The best way to determine whether a model actually reflects what is needed, or what should be built, is to build software based on that model that shows the model works.

16) Regression testing: The act of ensuring that previously tested behaviors still work as expected after changes have been made to an application.

17) Stress testing: The act of ensuring that the system performs as expected under high volumes of transactions, users, load, and so on.

18) Usage scenario testing: A testing technique in which one or more people validate a model by acting through the logic of usage scenarios.

19) User interface testing: The testing of the user interface (UI) to ensure that it follows accepted UI standards and meets the requirements defined for it. Often referred to as graphical user interface (GUI) testing.

20) White-box testing: Testing to verify that specific lines of code work as defined. Also referred to as clear-box testing.
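Boundary-value testing (item 2) is easy to show in code. The Python sketch below tests a hypothetical `in_range` function that accepts 100 to 900 inclusive by probing just below, on, and just above each boundary; `boundary_cases` and `run_boundary_tests` are illustrative helpers, not part of any test framework.

```python
def in_range(value, low=100, high=900):
    """Hypothetical item under test: accepts values from low to high inclusive."""
    return low <= value <= high


def boundary_cases(low, high):
    """Boundary-value inputs: just below, on, and just above each boundary."""
    return [(low - 1, False), (low, True), (low + 1, True),
            (high - 1, True), (high, True), (high + 1, False)]


def run_boundary_tests(func, low=100, high=900):
    """Return the inputs for which func disagrees with the expected result."""
    return [v for v, expected in boundary_cases(low, high) if func(v) != expected]


if __name__ == "__main__":
    print(run_boundary_tests(in_range))                  # prints []
    print(run_boundary_tests(lambda v: 100 < v <= 900))  # prints [100]
```

The second call shows the point of the technique: an off-by-one mistake (`<` instead of `<=`) passes every test in the middle of the range but is caught immediately at the boundary.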

Tuesday, December 18, 2007

Querying for similar-sounding results:

The SOUNDEX function returns a 4-character code representing the sound of the words in the argument. The result can be compared with the SOUNDEX codes of other strings.

The argument can be any string, but not a BLOB.

The data type of the result is CHAR(4). If the argument can be null, the result can be null; if the argument is null, the result is the null value.

The Soundex function is useful for finding strings for which the sound is known but the precise spelling is not. It makes assumptions about the way that letters and combinations of letters sound that can help to search out words with similar sounds.

The comparison can be done directly or by passing the strings as arguments to the DIFFERENCE function.

Example:

Run the following query:

SELECT eename
FROM ckempeff
WHERE SOUNDEX(eename) = SOUNDEX('Plips')

Query Result:

PHILLIPS, KRISTINA M

PLUVIOSE, NORIANIE

PHILLIPS, EDWARD D

PHILLIP, SHANNON L

PHELPS, PATRICIA E

PHILLIPS, KORI A

POLIVKA, BETTY M

PHELPS, AMY R

PHILLIPS III, CHARLES R
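To see why 'Plips' matches all of these names, here is a Python sketch of the classic American Soundex rules: keep the first letter, code the remaining consonants as digits, drop vowels, collapse adjacent letters with the same code, and pad or truncate to four characters. It is an approximation written for illustration, not DB2's implementation, which may treat edge cases such as H, W and non-letter characters differently.

```python
# Digit codes for consonants in classic American Soundex.
_CODES = {}
for _letters, _digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                         ("L", "4"), ("MN", "5"), ("R", "6")):
    for _ch in _letters:
        _CODES[_ch] = _digit


def soundex(text):
    """Classic American Soundex: first letter plus three digits, zero-padded."""
    letters = [ch for ch in text.upper() if "A" <= ch <= "Z"]
    if not letters:
        return ""  # assumption: no letters, no code
    first = letters[0]
    digits = []
    previous = _CODES.get(first, "")
    for ch in letters[1:]:
        code = _CODES.get(ch, "")
        if code and code != previous:
            digits.append(code)
            if len(digits) == 3:
                break
        if ch not in "HW":
            previous = code  # vowels separate repeated codes; H and W do not
    return (first + "".join(digits) + "000")[:4]


if __name__ == "__main__":
    for name in ("Plips", "PHILLIPS, KRISTINA M", "PHELPS, AMY R", "POLIVKA, BETTY M"):
        print(name, soundex(name))  # every line ends in P412
```

Every name in the result set above codes to P412, because after the leading P only the next three coded consonants matter: L (4), then a 1 from P or V, then a 2 from S or K.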

Three Valued Indicator:

Now a variable can be off, on, or neither off nor on. A three-valued indicator? Here's how it's done.

Declare a two-byte character variable.

     D SomeVar         S              2A   inz(*off)

SomeVar is *ON if it holds two ones, *OFF if it contains two zeros, and is neither *ON nor *OFF if it has one one and one zero.

Now you can code expressions like these:

      /free
       if SomeVar = *on;
         DoWhatever();
       endif;

       if SomeVar = *off;
         DoThis();
       endif;

       if SomeVar <> *on and SomeVar <> *off;
         DoSomething();
       else;
         DoSomethingElse();
       endif;

       if SomeVar = *on or SomeVar = *off;
         DoSomething();
       else;
         DoSomethingElse();
       endif;
      /end-free

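Don't those last two ifs look weird? With an ordinary one-byte indicator, the condition of the third if could never be true and the condition of the fourth could never be false. Believe it or not, when SomeVar holds '10' or '01', the third if calls DoSomething() and the fourth if takes its else branch.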
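The trick relies on how RPG compares a field with a figurative constant. *ON and *OFF are repeated to the length of the field, so for a 2A field they mean '11' and '00'. The Python sketch below models only that comparison rule. It is not RPG, and the helper names are our own. It evaluates the conditions of the last two ifs for each combination of ones and zeros.

```python
ON, OFF = "1", "0"   # the characters behind *ON and *OFF


def expand(figurative: str, length: int) -> str:
    """Repeat a figurative constant's character to fill the compared field."""
    return figurative * length


def last_two_ifs(some_var: str) -> tuple[bool, bool]:
    """Return the truth of the third and fourth IF conditions for a field value."""
    is_on = some_var == expand(ON, len(some_var))
    is_off = some_var == expand(OFF, len(some_var))
    third = not is_on and not is_off   # SomeVar <> *on and SomeVar <> *off
    fourth = is_on or is_off           # SomeVar = *on or SomeVar = *off
    return third, fourth


for value in ("11", "00", "10", "01"):
    print(value, last_two_ifs(value))
```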

Structural and Functional Testing

Structural testing is considered white-box testing because knowledge of the internal logic of the system is used to develop test cases. Structural testing includes path testing, code coverage testing and analysis, logic testing, nested loop testing, and similar techniques. Unit testing, string or integration testing, load testing, stress testing, and performance testing are considered structural.

Functional testing addresses the overall behavior of the program by testing transaction flows, input validation, and functional completeness. Functional testing is considered black-box testing because no knowledge of the internal logic of the system is used to develop test cases. System testing, regression testing, and user acceptance testing are types of functional testing.

Both methods together validate the entire system. For example, a functional test case might be taken from the documentation description of how to perform a certain function, such as accepting bar code input.

A structural test case might be taken from a technical documentation manual. To effectively test systems, both methods are needed. Each method has its pros and cons, which are listed below:

Structural Testing

Advantages

The logic of the software’s structure can be tested.

Parts of the software will be tested which might have been forgotten if only functional testing was performed.

Disadvantages

Its tests do not ensure that user requirements have been met.

Its tests may not mimic real-world situations.

Functional Testing

Advantages

Simulates actual system usage.

Makes no system structure assumptions.

Disadvantages

Potential for missing logical errors in the software.

Possibility of redundant testing.
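A small, hypothetical validator makes the bar code example concrete. Assume the bar code input is a 12-digit UPC-A code whose last digit is a check digit. The function and test names below are our own. The functional tests come from the check-digit rule alone. The structural tests were chosen by reading the code so that every branch runs at least once.

```python
def is_valid_upc(code: str) -> bool:
    """Return True if code is a 12-digit UPC-A string with a correct check digit."""
    if len(code) != 12:                          # branch 1: wrong length
        return False
    if not all("0" <= c <= "9" for c in code):   # branch 2: non-digit characters
        return False
    digits = [int(c) for c in code]
    # Positions 1, 3, ..., 11 are weighted 3; positions 2, 4, ..., 10 weigh 1.
    total = 3 * sum(digits[0:11:2]) + sum(digits[1:11:2])
    check = (10 - total % 10) % 10               # outer % 10 maps 10 back to 0
    return check == digits[11]                   # branch 3: compare check digit


def functional_tests() -> None:
    """Black-box cases taken from the check-digit rule, not from the code."""
    assert is_valid_upc("036000291452")          # a valid UPC-A code
    assert not is_valid_upc("036000291453")      # same code, wrong check digit


def structural_tests() -> None:
    """White-box cases picked by reading the code so each branch runs."""
    assert not is_valid_upc("12345")             # branch 1
    assert not is_valid_upc("03600029145X")      # branch 2
    assert is_valid_upc("000000000000")          # total % 10 == 0 path


functional_tests()
structural_tests()
print("all tests passed")
```

The functional tests never reach the length or non-digit branches. The structural tests never check a realistic product code. Each set covers a gap in the other.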

Thursday, December 13, 2007

Quick Reference in TAATOOL:

DSPRPGHLP:

The Display RPG Help tool provides help text and samples for 1) RPG III operation codes and 2) RPG IV operation codes (both fixed and free form), built-in functions, and H/F/D keywords. DSPRPGHLP provides a command interface to the help text that is normally accessed through SEU.

The tool also includes the STRRPGHLP and PRTRPGHLP commands.

Escape messages:

None. Escape messages from the underlying functions will be re-sent.

Required Parameters:

Keyword (KWD):

The keyword to enter. The default is *ALL and may be used with any of the RPGTYPE entries. Op codes and BIFs are considered keywords.

A built-in function may be entered when RPGTYPE(*RPGLEBIF) is specified. The % sign is not valid in an unquoted value when you use the prompter, so a value such as %ADDR must be entered in quotes: '%ADDR'.

RPG type (RPGTYPE):

*ALLRPG is the default, but may only be used when KWD(*ALL) is specified.

If the entry is other than *ALLRPG, the KWD value entered must be found in the appropriate group. For example, KWD(ADD) may be entered for an RPGTYPE of *RPG or *RPGLEOP, but is not valid for *RPGLEF. If the keyword cannot be found, a special display appears that lets you enter a correct value.

*RPGLEOP should be entered for RPGLE operation codes.

*RPGLEBIF should be entered for RPGLE Built-in functions.

*RPGLEH should be entered for H Spec keywords.

*RPGLEF should be entered for F Spec keywords.

*RPGLED should be entered for D Spec keywords.

Alternatively, just type DSPRPGHLP and press Enter.

Software Metrics:

Software metrics can be classified into three categories:

1. Product metrics,

2. Process metrics, and

3. Project metrics.

Product metrics describe the characteristics of the product such as size, complexity, design features, performance, and quality level.

Process metrics can be used to improve software development and maintenance. Examples include the effectiveness of defect removal during development, the pattern of testing defect arrival, and the response time of the fix process.

Project metrics describe the project characteristics and execution. Examples include the number of software developers, the staffing pattern over the life cycle of the software, cost, schedule, and productivity.

Some metrics belong to multiple categories. For example, the in-process quality metrics of a project are both process metrics and project metrics.

Software quality metrics are a subset of software metrics that focus on the quality aspects of the product, process, and project. In general, software quality metrics are more closely associated with process and product metrics than with project metrics. Nonetheless, the project parameters such as the number of developers and their skill levels, the schedule, the size, and the organization structure certainly affect the quality of the product. Software quality metrics can be divided further into end-product quality metrics and in-process quality metrics. Examples include:

Product quality metrics

• Mean time to failure

• Defect density

• Customer-reported problems

• Customer satisfaction

In-process quality metrics

• Phase-based defect removal pattern

• Defect removal effectiveness

• Defect density during formal machine testing

• Defect arrival pattern during formal machine testing

When development of a software product is complete and it is released to the market, it enters the maintenance phase of its life cycle. During this phase the defect arrivals by time interval and customer problem calls (which may or may not be defects) by time interval are the de facto metrics.
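Several of the product and in-process metrics above reduce to simple ratios. The Python sketch below uses commonly cited definitions:

• Defect density is defects per thousand lines of code (KLOC).

• Defect removal effectiveness is the percentage of all defects found that were removed before release.

• Mean time to failure is operating time divided by the number of failures.

Organizations define these slightly differently. The function names and all input numbers are hypothetical.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)


def defect_removal_effectiveness(removed_before_release: int,
                                 found_after_release: int) -> float:
    """Percentage of all known defects that were removed during development."""
    total = removed_before_release + found_after_release
    return 100.0 * removed_before_release / total


def mean_time_to_failure(operating_hours: float, failures: int) -> float:
    """Average operating hours per failure."""
    return operating_hours / failures


# Hypothetical figures for illustration only.
print(defect_density(defects=45, lines_of_code=30_000))          # prints 1.5
print(defect_removal_effectiveness(removed_before_release=180,
                                   found_after_release=20))        # prints 90.0
print(mean_time_to_failure(operating_hours=1_200, failures=4))   # prints 300.0
```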

Wednesday, December 12, 2007

Reorganize Physical File Member (RGZPFM):

Records are not physically removed from an iSeries table when they are deleted with the DELETE opcode. Instead, they are marked as deleted, and the operating system does not allow them to be viewed. These deleted records still stay in your tables, and they can end up taking a lot of space if not managed. The usual way to manage them is with the IBM command RGZPFM. This command finds all the records in a specific table that have been marked as deleted and removes them. It then resets the relative record numbers (RRN) of the remaining records and rebuilds the access paths, including those of the logical files.

If a keyed file is identified in the Key file (KEYFILE) parameter, the system reorganizes the member by changing the physical sequence of the records in storage to either match the keyed sequence of the physical file member's access path, or to match the access path of a logical file member that is defined over the physical file.

When the member is reorganized and KEYFILE(*NONE) is not specified, the sequence in which the records are actually stored is changed, and any deleted records are removed from the file. If KEYFILE(*NONE) is specified or defaulted, the sequence of the records does not change, but deleted records are removed from the member. Optionally, new sequence numbers and zero date fields are placed in the source fields of the records. These fields are changed after the member has been compressed or reorganized.

For example, the following Reorganize Physical File Member (RGZPFM) command reorganizes the first member of a physical file using an access path from a logical file:

RGZPFM FILE(DSTPRODLB/ORDHDRP) KEYFILE(DSTPRODLB/ORDFILL ORDFILL)

The physical file ORDHDRP has an arrival sequence access path. It was reorganized using the access path in the logical file ORDFILL. Assume the key field is the Order field. The following illustrates how the records were arranged.

The following is an example of the original ORDHDRP file. Note that record 3 was deleted before the RGZPFM command was run:

RRN  Cust   Order  Ordate
1    41394  41882  072480
2    28674  32133  060280
3    (deleted record)
4    56325  38694  062780

The following example shows the ORDHDRP file reorganized using the Order field as the key field in ascending sequence:

RRN  Cust   Order  Ordate
1    28674  32133  060280
2    56325  38694  062780
3    41394  41882  072480
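The outcome of RGZPFM with a KEYFILE can be modeled in a few lines. The Python sketch below reproduces only the result shown above: deleted records are dropped, the remaining records are stored in key order, and relative record numbers are reassigned from 1. It is not how the operating system implements the command. The record layout and names are assumptions based on the example.

```python
from typing import NamedTuple, Optional


class Record(NamedTuple):
    cust: int
    order: int
    ordate: str


def reorganize(records: list[Optional[Record]], key=None) -> dict[int, Record]:
    """Return {new RRN: record} after dropping deleted slots (None).

    With key=None the arrival sequence is kept, as with KEYFILE(*NONE);
    otherwise records are stored in key order, as with a KEYFILE.
    """
    live = [r for r in records if r is not None]   # remove deleted records
    if key is not None:
        live.sort(key=key)                         # physical order follows the key
    return {rrn: rec for rrn, rec in enumerate(live, start=1)}   # renumber RRNs


# ORDHDRP before reorganization; RRN 3 holds a deleted record.
ordhdrp = [Record(41394, 41882, "072480"),
           Record(28674, 32133, "060280"),
           None,
           Record(56325, 38694, "062780")]

for rrn, rec in reorganize(ordhdrp, key=lambda r: r.order).items():
    print(rrn, rec.cust, rec.order, rec.ordate)
```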

Tuesday, December 11, 2007

ON vs. WHERE:

Here is some invoicing data to illustrate the difference. First, the header information:

SELECT H.* FROM INVHDR AS H

Invoice  Company  Customer  Date
47566    1        44        2004-05-03
47567    2        5         2004-05-03
47568    1        10001     2004-05-03
47569    7        777       2004-05-03
47570    7        777       2004-05-04
47571    2        5         2004-05-04

And we have related details:

SELECT D.* FROM INVDTL AS D

Invoice  Line  Item    Price  Quantity
47566    1     AB1441  25.00  3
47566    2     JJ9999  20.00  4
47567    1     DN0120  .35    800
47569    1     DC2984  12.50  2
47570    1     MI8830  .10    10
47570    2     AB1441  24.00  100
47571    1     AJ7644  15.00  1

Notice that the following query contains a selection expression in the WHERE clause:

SELECT H.INVOICE, H.COMPANY, H.CUSTNBR, H.INVDATE,
       D.LINE, D.ITEM, D.QTY
  FROM INVHDR AS H
  LEFT JOIN INVDTL AS D
    ON H.INVOICE = D.INVOICE
 WHERE H.COMPANY = 1

Invoice  Company  Customer  Date        Line  Item    Quantity
47566    1        44        2004-05-03  1     AB1441  3
47566    1        44        2004-05-03  2     JJ9999  4
47568    1        10001     2004-05-03  -     -       -

The result set includes data for company one invoices only. If we move the selection expression to the ON clause:

SELECT H.INVOICE, H.COMPANY, H.CUSTNBR, H.INVDATE,
       D.LINE, D.ITEM, D.QTY
  FROM INVHDR AS H
  LEFT JOIN INVDTL AS D
    ON H.INVOICE = D.INVOICE
   AND H.COMPANY = 1

Invoice  Company  Customer  Date        Line  Item    Quantity
47566    1        44        2004-05-03  1     AB1441  3
47566    1        44        2004-05-03  2     JJ9999  4
47567    2        5         2004-05-03  -     -       -
47568    1        10001     2004-05-03  -     -       -
47569    7        777       2004-05-03  -     -       -
47570    7        777       2004-05-04  -     -       -
47571    2        5         2004-05-04  -     -       -

This query differs from the previous one in that all invoice headers are in the resulting table, not just those for company number one. Notice that details are null for other companies, even though some of those invoices have corresponding rows in the details file. What’s going on?

Here’s the difference. When a selection expression is placed in the WHERE clause, the join is performed first. The filter is then applied to the joined rows to select the ones returned in the result set. When a selection expression is placed in the ON clause of an outer join, it limits the rows that take part in the join. However, it does not limit which rows of the primary (left) table are placed in the result set. In other words, ON restricts the rows that are allowed to participate in the join. In this case, all header rows are placed in the result set, but only company one header rows are allowed to join to the details.
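You can reproduce both result sets with any SQL engine that supports LEFT JOIN. The Python sketch below loads the sample rows into an in-memory SQLite database and runs both versions of the query. SQLite is only a stand-in for DB2 for i here. Table and column names follow the queries above, and the PRICE column name is our assumption.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE INVHDR (INVOICE INT, COMPANY INT, CUSTNBR INT, INVDATE TEXT);
    CREATE TABLE INVDTL (INVOICE INT, LINE INT, ITEM TEXT, PRICE REAL, QTY INT);
""")
con.executemany("INSERT INTO INVHDR VALUES (?, ?, ?, ?)", [
    (47566, 1, 44, "2004-05-03"), (47567, 2, 5, "2004-05-03"),
    (47568, 1, 10001, "2004-05-03"), (47569, 7, 777, "2004-05-03"),
    (47570, 7, 777, "2004-05-04"), (47571, 2, 5, "2004-05-04")])
con.executemany("INSERT INTO INVDTL VALUES (?, ?, ?, ?, ?)", [
    (47566, 1, "AB1441", 25.00, 3), (47566, 2, "JJ9999", 20.00, 4),
    (47567, 1, "DN0120", 0.35, 800), (47569, 1, "DC2984", 12.50, 2),
    (47570, 1, "MI8830", 0.10, 10), (47570, 2, "AB1441", 24.00, 100),
    (47571, 1, "AJ7644", 15.00, 1)])

# The two queries differ only in where "H.COMPANY = 1" goes (fixed text, no user input).
QUERY = """SELECT H.INVOICE, H.COMPANY, H.CUSTNBR, H.INVDATE,
                  D.LINE, D.ITEM, D.QTY
             FROM INVHDR AS H
             LEFT JOIN INVDTL AS D
               ON H.INVOICE = D.INVOICE
             {filter}
            ORDER BY H.INVOICE, D.LINE"""

# WHERE: join every header first, then keep only company 1 rows.
where_rows = con.execute(QUERY.format(filter="WHERE H.COMPANY = 1")).fetchall()
# ON: every header is kept; only company 1 headers may pick up details.
on_rows = con.execute(QUERY.format(filter="AND H.COMPANY = 1")).fetchall()

print(len(where_rows), "rows with the filter in WHERE")   # 3 rows
print(len(on_rows), "rows with the filter in ON")         # 7 rows
for row in on_rows:
    print(row)
```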

Monday, December 10, 2007

Pair Programming:

Pair programming is one of the most contentious practices of extreme programming (XP). The basic concept of pair programming, or "pairing," is two developers actively working together to build code. In XP, the rule is that all production code must be produced by pairs. The chief benefit touted by pairing proponents is improved code quality: two heads are better than one. Note that pairing is a practice you can use independently of XP. However, it may require a cultural change in traditional software shops. Explaining the benefits and giving some guidance will help:

General benefits:

• Produces better code coverage. By switching pairs, developers understand more of the system.

• Minimizes dependencies upon personnel.

• Results in a more evenly paced, sustainable development rhythm.

• Can produce solutions more rapidly.

• Moves all team members to a higher level of skills and system understanding.

• Helps build a true team.

Specific benefits from a management standpoint:

• Reduces risk

• Shorter learning curve for new hires

• Can be used as an interviewing criterion ("can we work with this guy?")

• Problems are far less hidden

• Helps ensure adherence to standards

• Cross-pollination/resource fluidity.

Specific benefits from an employee perspective:

• Awareness of other parts of the system

• Resume building

• Decreases time spent in review meetings

• Continuous education. Learn new things every day from even the most junior programmers.

• Provides the ability to move between teams.

• More rapid learning as a new hire.

Rules:

1. All production code must be developed by a pair.

2. It’s not one person doing all the work and another watching.

3. Switch keyboards several times an hour. The person without the keyboard should be thinking about the bigger picture and should be providing strategic direction.

4. Don’t pair more than 75% of your work day. Make sure you take breaks! Get up and walk around for a few minutes at least once an hour.

5. Switch pairs frequently, at least once a day.

Writing Free-Form SQL Statements:

If you have the SQL Development Kit, you may very well be happy to know that V5R4 allows you to place your SQL commands in free-format calcs.

Begin the statement with EXEC SQL. Be sure both words are on the same line. Code the statement in free-format syntax across as many lines as you like, and end with a semicolon.

      /free
       exec sql
         update SomeFile
            set SomeField = :SomeValue
          where AnotherField = :AnotherValue;
      /end-free

Friday, December 7, 2007

Enabling a "workstation time-out" feature in RPG:

There are five things required to provide a time-out option on any interactive workstation file. This capability allows an RPG program to receive control even if the end user has not pressed a function key or Enter. Some of the uses for this kind of function include:

• Providing a marquee for a schedule via a subfile

• Updating the time displayed on the workstation at regular intervals

• Refreshing information, such as a news display, periodically

As mentioned, there are five things required to achieve workstation time-out. Those five things are:

1. Add the INVITE keyword to the Workstation display file DDS. This is a file-level keyword.

2. Use the WAITRCD parameter of CRTDSPF to set the desired time-out period.

3. Add the MAXDEV(*FILE) keyword to the File specification for the Workstation device file.

4. Write the desired display file formats to the display using the normal methods.

5. Use the READ operation code to read the display file name, not a record format name.

Avoid using EXFMT with the display file, because this operation code does not support workstation time-out.

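     FMarquee   CF   E             WORKSTN MAXDEV(*FILE)
     F                                     SFILE(detail:rrn)
     Drrn              S              4  0
      * Write the header, the footer and twelve subfile records
     C                   Write     Header
     C                   Write     Footer
     C                   Do        12            rrn
     C                   Write     Detail
     C                   EndDo
     C                   Write     SFLCTLFMT
      * Read by file name; with (E), a time-out sets %STATUS to 1331
     C                   Read(E)   Marquee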
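The result is a loop that wakes up either when the user presses a key or when the WAITRCD interval expires. The Python sketch below shows that control flow as a rough analogy only. It is not RPG, and a queue merely stands in for the workstation. The function name, key names and the 0.05-second interval are made up for the example.

```python
import queue


def marquee_loop(keys: "queue.Queue[str]", wait_seconds: float,
                 max_reads: int) -> list[str]:
    """Log what happens on each timed read; stop on F3 or after max_reads."""
    log = []
    for _ in range(max_reads):
        try:
            key = keys.get(timeout=wait_seconds)   # like READ on the file name
        except queue.Empty:                        # like the WAITRCD time-out
            log.append("time-out: refresh display")
            continue
        log.append(f"key: {key}")
        if key == "F3":                            # the user asked to leave
            break
    return log


idle: "queue.Queue[str]" = queue.Queue()
print(marquee_loop(idle, wait_seconds=0.05, max_reads=2))

busy: "queue.Queue[str]" = queue.Queue()
busy.put("Enter")
busy.put("F3")
print(marquee_loop(busy, wait_seconds=0.05, max_reads=5))
```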

Tuesday, December 4, 2007

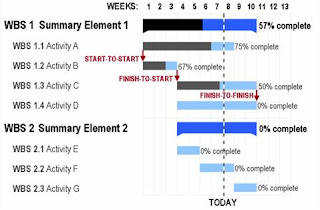

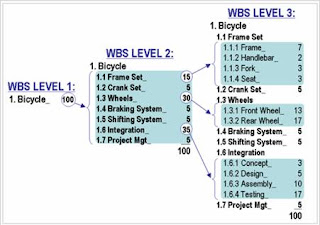

Gantt Charts:

A Gantt chart is a popular type of bar chart that illustrates a project schedule. Gantt charts illustrate the start and finish dates of the terminal elements and summary elements of a project. Terminal elements and summary elements comprise the work breakdown structure of the project. Some Gantt charts also show the dependency (i.e., precedence network) relationships between activities. Gantt charts can be used to show current schedule status using percent-complete shadings and a vertical "TODAY" line as shown here.

Gantt charts may be simple versions drawn on graph paper, or more complex automated versions created with project management applications such as Microsoft Project or with spreadsheets such as Excel.

Excel does not contain a built-in Gantt chart format; however, you can create a Gantt chart in Excel by customizing the stacked bar chart type.
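The stacked-bar approach works because every Gantt bar is two numbers: an offset (the start) and a length (the duration). The Python sketch below computes earliest start and finish days from task durations and finish-to-start dependencies, which are the precedence relationships mentioned above. It then draws a text chart made of a blank offset followed by a visible bar. The task names and durations are invented for illustration.

```python
def schedule(durations: dict[str, int],
             depends_on: dict[str, list[str]]) -> dict[str, tuple[int, int]]:
    """Return {task: (start_day, finish_day)} using finish-to-start dependencies.

    Assumes the dependencies contain no cycles.
    """
    dates: dict[str, tuple[int, int]] = {}

    def place(task: str) -> tuple[int, int]:
        if task not in dates:
            # A task can start only when all of its predecessors have finished.
            start = max((place(p)[1] for p in depends_on.get(task, [])), default=0)
            dates[task] = (start, start + durations[task])
        return dates[task]

    for task in durations:
        place(task)
    return dates


def gantt(dates: dict[str, tuple[int, int]]) -> str:
    """Draw each task as a blank offset (the hidden bar) plus a '#' bar."""
    width = max(len(task) for task in dates)
    return "\n".join(f"{task:<{width}} |{' ' * start}{'#' * (finish - start)}"
                     for task, (start, finish) in dates.items())


tasks = {"Design": 3, "Build": 5, "Test": 2, "Docs": 2}
deps = {"Build": ["Design"], "Test": ["Build"], "Docs": ["Design"]}
plan = schedule(tasks, deps)
print(plan)
print(gantt(plan))
```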

Advantages:

• Gantt charts have become a common technique for representing the phases and activities of a project work breakdown structure (WBS), so they can be understood by a wide audience.

• A Gantt chart allows you to assess how long a project should take.

• A Gantt chart lays out the order in which tasks need to be carried out.

• A Gantt chart helps manage the dependencies between tasks.

• A Gantt chart allows you to see immediately what should have been achieved at a point in time.

• A Gantt chart allows you to see how remedial action may bring the project back on course.

Monday, December 3, 2007

TAATOOL Commands for Copying Data Queues:

To copy the entries from one data queue into another data queue, you can use two TAATOOL commands. Follow these steps.

1. The CVTDTAQ (Convert Data Queue) command copies the data queue entries to the specified file. It converts the entries from a keyed or non-keyed TYPE(*STD) data queue to an outfile named DTAQP, writing one record for each entry. The entry field in the outfile is limited to 9,000 bytes; data beyond this length is truncated.

The model file is TAADTQMP with a format name of DTAQR.

Run the CVTDTAQ command, specifying the name of the data queue to copy the entries from and the name of the file to write them to.

2. The CPYBCKDTAQ (Copy Back Data Queue) command copies the entries from the file to the specified data queue. It is intended for refreshing a data queue or duplicating its entries to a different data queue. You must first convert the entries in the data queue to the DTAQP file with the TAA CVTDTAQ command.

CPYBCKDTAQ then reads the data from the DTAQP file and uses the QSNDDTAQ API to send the entries to a named data queue. Both keyed and non-keyed data queues are supported.

Run the CPYBCKDTAQ command, specifying the file that contains the entries and the name of the data queue to copy them to.
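The round trip can be pictured with a small model. The Python sketch below is not the TAA code and does not call any IBM i API: the queues and the outfile are plain Python lists, and the `cvtdtaq` and `cpybckdtaq` functions are hypothetical stand-ins that mirror only the behaviour described above (one record per entry, keys carried along for keyed queues, entries truncated at 9,000 bytes). It leaves the source list untouched; check the TAA documentation for how the real command treats the source queue.

```python
from typing import List, Optional, Tuple

MAX_ENTRY_BYTES = 9000  # stated limit of the outfile entry field

# A queue entry is modelled as (key, data); key is None for a non-keyed queue.
Entry = Tuple[Optional[bytes], bytes]


def cvtdtaq(queue: List[Entry]) -> List[dict]:
    """Model of step 1: write one 'record' per entry, truncating long data."""
    return [{"key": key, "entry": data[:MAX_ENTRY_BYTES]} for key, data in queue]


def cpybckdtaq(records: List[dict], target: List[Entry]) -> None:
    """Model of step 2: read the records back and 'send' each to a queue."""
    for rec in records:
        target.append((rec["key"], rec["entry"]))  # stands in for QSNDDTAQ


source_queue = [(None, b"ORDER 1001"), (None, b"X" * 9500)]
outfile = cvtdtaq(source_queue)
target_queue: List[Entry] = []
cpybckdtaq(outfile, target_queue)
print(len(target_queue), len(target_queue[1][1]))  # 2 9000
```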

Sunday, December 2, 2007

Project Development Stages:

The project development process has four major stages: initiation, planning and design, production or execution, and closing and maintenance.

Initiation

The initiation stage determines the nature and scope of the development. If this stage is not performed well, the project is unlikely to meet the business’s needs. The key project controls needed here are understanding the business environment and making sure that all necessary controls are incorporated into the project. Any deficiencies should be reported, along with a recommendation for fixing them.

The initiation stage should include a cohesive plan that encompasses the following areas:

• A study analyzing the business needs in terms of measurable goals.

• Review of the current operations.

• Conceptual design of the operation of the final product.

• Equipment requirements.

• Financial analysis of the costs and benefits, including a budget.

• Selection of stakeholders, including users and support personnel, for the project.

• Project charter including costs, tasks, deliverables, and schedule.

Planning and design

After the initiation stage, the system is designed. Occasionally, a small prototype of the final product is built and tested. Testing is generally performed by a combination of testers and end users, and can occur after the prototype is built or concurrently with it. Controls should be in place to ensure that the final product will meet the specifications of the project charter. The results of the design stage should include a product design that:

• Satisfies the project sponsor, end user, and business requirements.

• Functions as intended.

• Can be produced within quality standards.

• Can be produced within time and budget constraints.

Closing and maintenance

Closing includes the formal acceptance and conclusion of the project. Administrative activities include archiving the files and documenting lessons learned.

Maintenance is an ongoing process, and it includes:

• Continuing support of end users

• Correction of errors

• Updates of the software over time

In this stage, auditors should pay attention to how effectively and quickly user problems are resolved.

Thursday, November 29, 2007

Smoke Testing:

Smoke testing is a term used in plumbing, woodwind repair, electronics, and computer software development. It refers to the first test made after repairs or first assembly to provide some assurance that the system under test will not catastrophically fail. After a smoke test proves that the pipes will not leak, the keys seal properly, the circuit will not burn, or the software will not crash outright, the assembly is ready for more stressful testing.

In computer programming and software testing, smoke testing is a preliminary to further testing; it should reveal simple failures severe enough to reject a prospective software release.

Smoke testing is done by developers before the build is released or by testers before accepting a build for further testing.

In software engineering, a smoke test generally consists of a collection of tests that can be applied to a newly created or repaired computer program. Sometimes the tests are performed by the automated system that builds the final software. In this sense, a smoke test is the process of validating code changes before they are checked into the larger product’s official source code collection. After code reviews, smoke testing is often cited as the most cost-effective method for identifying and fixing defects in software; some even believe that it is the most effective of all.

In software testing, a smoke test is a collection of written tests that are performed on a system before it is accepted for further testing. This is also known as a build verification test. It takes a "shallow and wide" approach to the application: the tester "touches" all areas of the application without going too deep, looking for answers to basic questions such as "Can I launch the test item at all?", "Does it open to a window?" and "Do the buttons on the window do things?". There is no need to get down to field validation or business flows. If the answer to basic questions like these is "No", the application is so badly broken that there is effectively nothing there to test further. These written tests can be performed either manually or with an automated tool. When automated tools are used, the tests are often initiated by the same process that generates the build itself.

This is sometimes referred to as 'rattle' testing, as in 'if I shake it, does it rattle?'.
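A build verification test can be sketched as an ordered list of shallow checks that stops at the first "No". In the Python sketch below, the `App` class is a hypothetical stand-in for the program under test and `run_smoke_tests` is a hypothetical helper; the checks simply mirror the three basic questions quoted above. A real suite would drive the actual application, manually or with an automated tool started by the build process.

```python
from typing import Callable, List, Tuple


class App:
    """Hypothetical stand-in for a freshly built application."""

    def __init__(self) -> None:
        self.window_open = False

    def launch(self) -> bool:
        return True

    def open_main_window(self) -> bool:
        self.window_open = True
        return self.window_open

    def click(self, button: str) -> str:
        # A button "does something" only once the window is open.
        return f"{button} clicked" if self.window_open else ""


def run_smoke_tests(app: App) -> bool:
    """Shallow and wide: ask only the basic questions, stop at the first 'No'."""
    checks: List[Tuple[str, Callable[[], bool]]] = [
        ("Can I launch the test item at all?", app.launch),
        ("Does it open to a window?", app.open_main_window),
        ("Do the buttons on the window do things?",
         lambda: all(app.click(b) for b in ("OK", "Cancel"))),
    ]
    for question, check in checks:
        if not check():
            print(f"REJECT BUILD: {question} -> No")
            return False
    print("Build accepted for further testing")
    return True


run_smoke_tests(App())
```

Stopping at the first failure is deliberate: if the program cannot even launch, the later questions have no meaning, and the build is rejected without spending time on deeper testing.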

Wednesday, November 28, 2007

Add some colors to your source:

Here's a tip many people may not know about. When coding programs, we add a lot of comments to explain the logic or functionality. Instead of leaving these comments in the default green, we may prefer to show them in different colors. We can add colors to our source by using a simple Client Access keyboard mapping.

o Click on the "MAP" button.